AI-powered Kubernetes

Jan 27, 2022. 12 min

Machine Learning has proved to be a lucrative innovation, generating substantial profits for many organizations, and is expected to continue its upward trajectory in the future. Nonetheless, several challenges persist, impeding the successful integration of Machine Learning applications into production environments. One of them is “Concept Drift”.

In the context of machine learning, the term “concept drift” (also referred to as “nonstationarity”, “covariate shift”, or “dataset shift”) describes a phenomenon where the relationship between input and output data in a given problem changes over time. The problem being addressed may evolve significantly, making a predictive model less accurate and less consistent. It is therefore important to monitor and adapt machine learning algorithms so that they remain effective as real-world conditions evolve.

For example, consider a model that has been deployed to forecast the likelihood of a prospective customer purchasing a particular product based on their historical browsing patterns. While the model demonstrates a high level of accuracy initially, over the course of time, it begins to deteriorate markedly. This could be because the customers' preferences have changed over time.

The core assumption when dealing with the concept drift problem is uncertainty about the future. We assume that the source of the target instance is not known with certainty. It can be assumed, estimated, or predicted, but there is no certainty.

Concept drift can occur due to various reasons, such as:

Machine learning modeling is, technically, the approximation of a mapping function f that takes input data X and predicts an output value y, i.e. y = f(X). When the mapping function is static, meaning that the relationship between historical and future data, and between input and output variables, stays consistent, the model may not need updating over time. In most real-world scenarios this is not the case, and the predictive performance of such models deteriorates.
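To make the idea of a changing mapping concrete, here is a minimal sketch. The functions `f_old` and `f_new` are hypothetical stand-ins for the "true" relationship before and after drift; they are assumptions made for illustration, not part of any real dataset. A line fitted on the old concept is evaluated on both.

```python
# Hypothetical "true" mappings before and after the concept changes.
def f_old(x):
    return 2.0 * x

def f_new(x):
    return 2.0 * x + 3.0  # same inputs, shifted outputs


def fit_line(xs, ys):
    """Ordinary least squares fit of y = a * x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b


def mean_abs_error(model, xs, ys):
    """Average absolute prediction error of the fitted line."""
    a, b = model
    return sum(abs(a * x + b - y) for x, y in zip(xs, ys)) / len(xs)


xs = [float(i) for i in range(10)]
model = fit_line(xs, [f_old(x) for x in xs])  # trained on the old concept only

error_before = mean_abs_error(model, xs, [f_old(x) for x in xs])
error_after = mean_abs_error(model, xs, [f_new(x) for x in xs])
print(error_before, error_after)
```

The inputs are identical in both evaluations; only the mapping from X to y has changed, which is why the static model's error grows.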

Some common changes are:

Before handling the drift, it is crucial to identify its source in the data. Effective machine learning requires understanding the subtle distinctions between adaptive learning algorithms, since some algorithms are better suited to specific types of change than others.

In simple words, machine learning model monitoring is the process of keeping an eye on how well a model is performing once it has been deployed. It is an important part of an MLOps system, helping to ensure that the model remains accurate and useful over time. The typical monitoring steps are as follows.

Once a model is active, and the production model monitoring system detects concept drift, it can initiate a trigger that signals any relevant changes, thereby prompting a process of learning. Depending on the problem, there are different methods for detecting concept drift.

Some methods rely on measuring performance metrics such as accuracy, precision, recall, or F1-score and comparing them with thresholds or baselines. If a metric crosses its threshold (for example, accuracy falls well below its baseline), this indicates possible concept drift.
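A metric-based check of this kind can be sketched as follows. The class name `AccuracyMonitor`, the window size, and the tolerance are illustrative assumptions, and the stream of predictions is synthetic: the simulated model is right 9 times out of 10 at first, then only every other time.

```python
from collections import deque


class AccuracyMonitor:
    """Flags drift when rolling accuracy drops below baseline - tolerance."""

    def __init__(self, baseline, tolerance=0.10, window=50):
        self.baseline = baseline
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window)  # 1 = correct, 0 = wrong

    def update(self, prediction, label):
        self.outcomes.append(1 if prediction == label else 0)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough evidence yet
        accuracy = sum(self.outcomes) / len(self.outcomes)
        return accuracy < self.baseline - self.tolerance


monitor = AccuracyMonitor(baseline=0.9)
alarm_index = None
for i in range(200):
    # Synthetic behaviour: 90% correct before index 100, 50% correct after.
    correct = (i % 10 != 0) if i < 100 else (i % 2 == 0)
    label = 1
    prediction = 1 if correct else 0
    if monitor.update(prediction, label):
        alarm_index = i
        break

print("Accuracy alarm raised at index:", alarm_index)
```

Note that this approach needs ground-truth labels to arrive soon after the predictions, which is not always the case in production.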

Other methods rely on analyzing the statistical properties of the input data (features) or the output data (labels) and testing whether they have changed significantly over time. Common tests and measures include the Kolmogorov-Smirnov test, the Chi-square test, and the Kullback-Leibler divergence.
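As an illustration of a distribution-based check, the sketch below computes the two-sample Kolmogorov-Smirnov statistic by hand and compares it with the usual large-sample critical value. The function names `ks_statistic` and `ks_drift` and the sample data are assumptions for this example; in practice a library routine such as `scipy.stats.ks_2samp` would normally be used instead.

```python
import math
from bisect import bisect_right


def ks_statistic(reference, current):
    """Largest gap between the two empirical CDFs."""
    ref, cur = sorted(reference), sorted(current)
    n, m = len(ref), len(cur)
    d = 0.0
    for v in ref + cur:  # the maximum gap occurs at an observed value
        cdf_ref = bisect_right(ref, v) / n
        cdf_cur = bisect_right(cur, v) / m
        d = max(d, abs(cdf_ref - cdf_cur))
    return d


def ks_drift(reference, current, alpha=0.05):
    """True if the samples differ at level alpha (large-sample approximation)."""
    n, m = len(reference), len(current)
    critical = math.sqrt(-0.5 * math.log(alpha / 2)) * math.sqrt((n + m) / (n * m))
    return ks_statistic(reference, current) > critical


# Synthetic feature values: a reference window, a near-identical window,
# and a window whose values are shifted upwards by 0.5.
reference = [i / 100 for i in range(100)]
similar = [i / 100 + 0.005 for i in range(100)]
shifted = [i / 100 + 0.5 for i in range(100)]

print(ks_drift(reference, similar))  # distributions are nearly the same
print(ks_drift(reference, shifted))  # distribution has moved
```

A check like this works on features alone, so it can run even when labels are delayed, but a shift in inputs does not always mean the model's predictions have become worse.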

Some examples of concept drift detection algorithms are:

There are many more concept drift detection algorithms available, and the choice of algorithm depends on the specific requirements and characteristics of the problem at hand.

Once drift is detected, a new dataset needs to be prepared. Careful consideration must be given to how new data is integrated into model training, so that the model can learn to adapt to changes over time and discard irrelevant information.

The model needs to continually update its knowledge with each new sample while using a forgetting mechanism that helps it adjust to the latest concept changes, so that its predictions remain accurate and up to date. Depending on the problem domain, this means either retraining the same model or designing a different one. A different model may be suitable for domains where sudden changes are expected and have occurred before, and can therefore be anticipated and verified. Retraining the same model is generally used for gradual concept drift.
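One simple way to picture a forgetting mechanism is an exponentially weighted estimate, where older samples count for less with every update. This is only a sketch: the class `ForgettingMean` and the forgetting factor of 0.9 are assumptions chosen for illustration, and a running mean stands in for a full model.

```python
class ForgettingMean:
    """Running estimate that discounts old samples by a forgetting factor."""

    def __init__(self, forgetting=0.9):
        self.forgetting = forgetting  # closer to 1.0 = longer memory
        self.estimate = None

    def update(self, value):
        if self.estimate is None:
            self.estimate = float(value)
        else:
            self.estimate = (self.forgetting * self.estimate
                             + (1.0 - self.forgetting) * value)
        return self.estimate


# Synthetic stream: the target level jumps from 0 to 4 halfway through.
stream = [0.0] * 50 + [4.0] * 50

adaptive = ForgettingMean(forgetting=0.9)
for value in stream:
    adaptive.update(value)

plain_mean = sum(stream) / len(stream)  # never forgets anything

print("Plain mean:     ", plain_mean)
print("Forgetting mean:", adaptive.estimate)
```

The plain mean stays stuck between the old and the new concept, while the forgetting estimate ends up close to the new level. The forgetting factor trades stability against speed of adaptation.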

Have an evaluation strategy ready so that error analysis can be performed on the new model. Error analysis depends on the problem domain and requirements, and varies from case to case. Repeat the whole procedure each time drift is detected. Finally, automate as much of this as the use case allows; this should be the core function of any monitoring solution.

Let’s look at drift detection with ADWIN (Adaptive Windowing). ADWIN keeps a sliding window over the most recent observations. The window size is not fixed: the window grows while the data looks stationary and shrinks when a change is found. As new data arrives, ADWIN examines splits of the window into an older and a newer sub-window. When two sub-windows show sufficiently distinct means, drift is reported and the older sub-window is dropped. The threshold for this comparison is derived from a user-defined confidence parameter (usually called delta); if the absolute difference between the two sub-window means exceeds it, an alarm is generated. This method is applicable to univariate data.
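The sub-window comparison can be sketched in plain Python. This is a deliberately simplified version and not the library implementation: the class name `NaiveAdwin` is hypothetical, it stores every observation instead of using ADWIN's compressed buckets, it drops the whole older sub-window at the first failing split, and the Hoeffding-style threshold it uses assumes values scaled to the range [0, 1].

```python
import math


class NaiveAdwin:
    """Simplified ADWIN-style detector for values in [0, 1]."""

    def __init__(self, delta=0.002):
        self.delta = delta  # confidence parameter
        self.window = []    # all observations currently kept

    def add(self, value):
        """Add one observation; return True if a change was detected."""
        self.window.append(value)
        changed = False
        shrinking = True
        while shrinking and len(self.window) >= 2:
            shrinking = False
            n = len(self.window)
            total = sum(self.window)
            head_sum = 0.0
            for split in range(1, n):
                head_sum += self.window[split - 1]
                n0, n1 = split, n - split
                mean_old = head_sum / n0
                mean_new = (total - head_sum) / n1
                # Threshold shrinks as both sub-windows get larger.
                m = 1.0 / (1.0 / n0 + 1.0 / n1)
                eps_cut = math.sqrt(math.log(4.0 * n / self.delta) / (2.0 * m))
                if abs(mean_old - mean_new) > eps_cut:
                    self.window = self.window[split:]  # drop older sub-window
                    changed = True
                    shrinking = True
                    break
        return changed


# Synthetic stream: the mean jumps from 0.2 to 0.8 at index 100.
stream = [0.2] * 100 + [0.8] * 100
detector = NaiveAdwin()
detections = [i for i, value in enumerate(stream) if detector.add(value)]
print("Change detected at indices:", detections)
```

Detection lags the actual change point because the newer sub-window needs enough observations before the difference in means exceeds the threshold.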

Let's walk through a simple example in Python using the ADWIN implementation from the scikit-multiflow library.

# Imports
import numpy as np
from skmultiflow.drift_detection.adwin import ADWIN
import matplotlib.pyplot as plt

# Create the drift detector with its default settings
adwin = ADWIN()

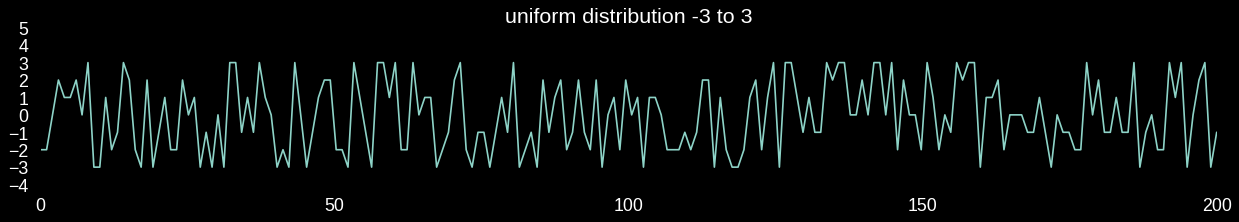

# Simulating a data stream of integers drawn uniformly from -3 to 3
# (the upper bound of randint is exclusive, hence high=4)
x = np.linspace(0, 200, 200)
data_stream = np.random.randint(low=-3, high=4, size=200)

Data with uniform distribution

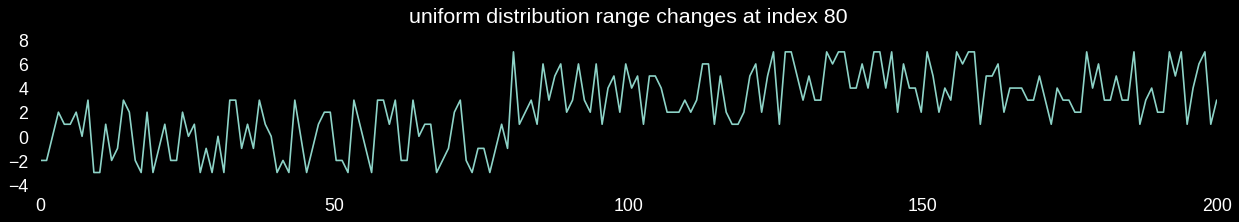

# Changing the data concept from index 80 to 200
for i in range(80, 200):
    data_stream[i] = data_stream[i] + 4

Data with uniform distribution range change

# Adding stream elements to ADWIN and verifying if drift occurred
for i in range(200):
    adwin.add_element(data_stream[i])
    if adwin.detected_change():
        print('Change detected in data: ' + str(data_stream[i]) + ' - at index: ' + str(i))
        break

Change detected in data: 7 - at index: 97

Concept drift is a widespread challenge in the fields of machine learning and artificial intelligence that requires careful attention and adaptation. It can have a significant impact on the performance and dependability of models that are deployed in dynamic environments where data changes over time. Various methods exist for detecting and addressing concept drift, but no single approach is effective in all situations. It is therefore vital to understand the root causes, types, and characteristics of concept drift and to select the most suitable approach for each specific problem domain.

I hope this blog has provided you with valuable insights into concept drift. If you have any queries or feedback, please feel free to share them in the comments section below. Thank you for taking the time to read it!
